Introduction

Quick-commerce platforms like Blinkit have transformed how consumers shop for groceries and daily essentials. With real-time inventory updates, hyperlocal pricing, and rapid delivery promises, Blinkit's catalog produces a continuous stream of price, stock, and delivery data.

For brands, retailers, price intelligence firms, data analytics companies, and investors, this data is a goldmine. However, Blinkit does not provide a public API for structured access to product listings, prices, discounts, availability, or delivery timelines.

This is where a Blinkit Product Data API Integration becomes critical.

In this detailed guide, we walk through how to design, build, and deploy a scalable Blinkit Product Data API—from understanding Blinkit’s data structure to scraping, normalization, and real-time delivery via APIs.

Why Build a Blinkit Product Data API?

Before diving into the technical steps, let’s understand why businesses invest in Blinkit data APIs.

Key Business Use Cases

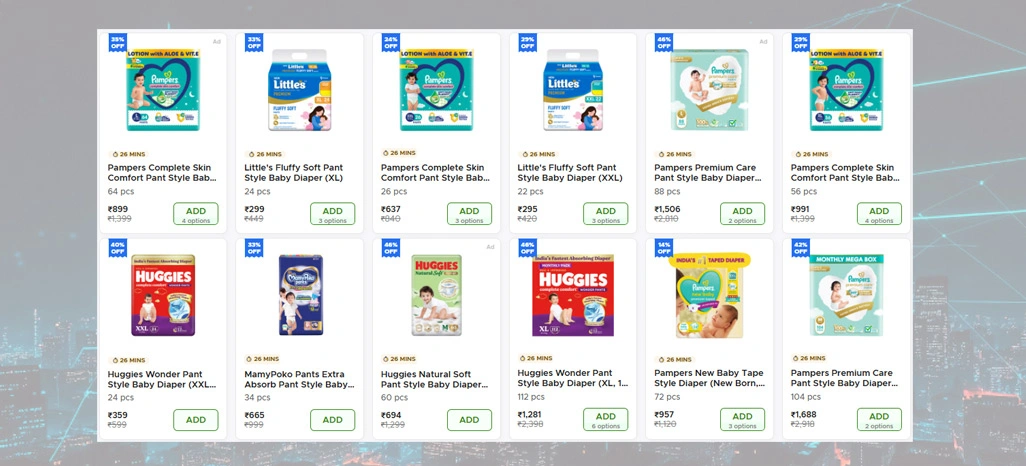

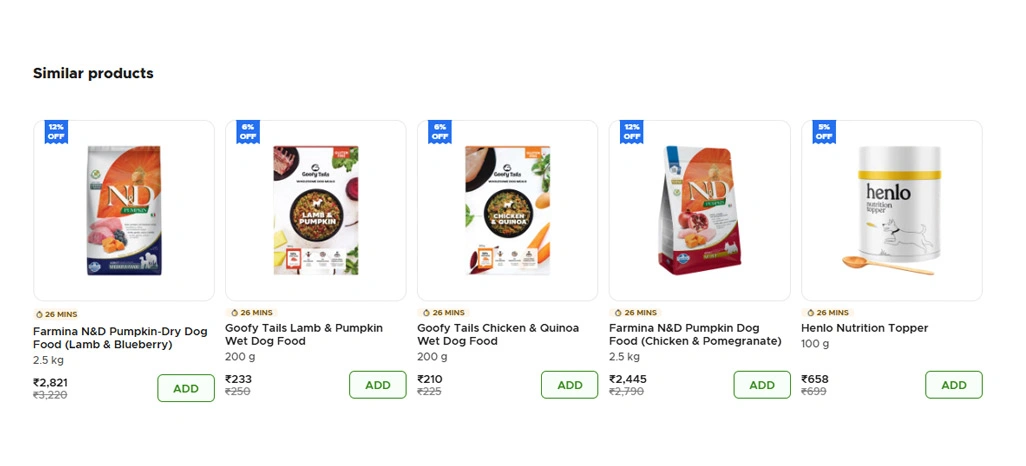

- Retail Price Monitoring & Comparison

- Dynamic Pricing Intelligence

- Stock & Availability Tracking

- Discount & Offer Analysis

- Private Label Competitive Benchmarking

- Quick-Commerce Market Research

- Demand Forecasting & SKU Performance Analysis

A well-built API allows teams to consume Blinkit data programmatically and integrate it into dashboards, ERP systems, BI tools, or AI pricing engines.

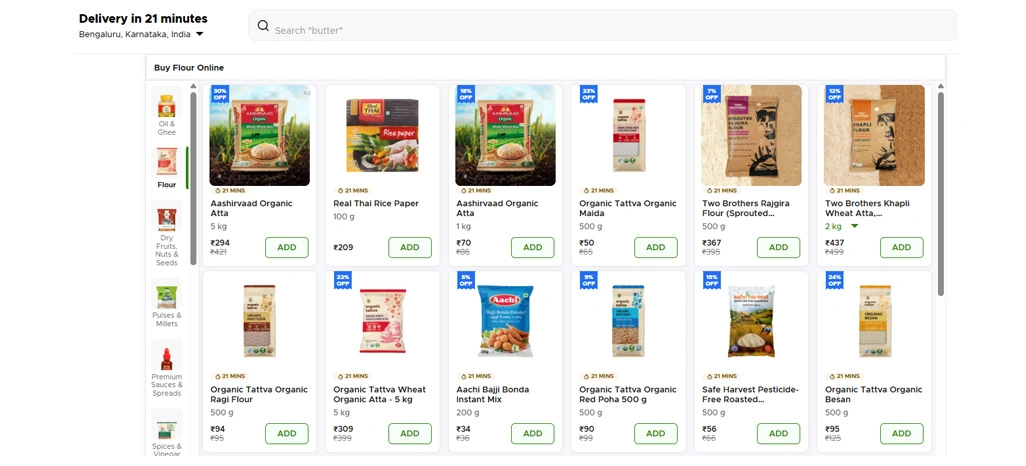

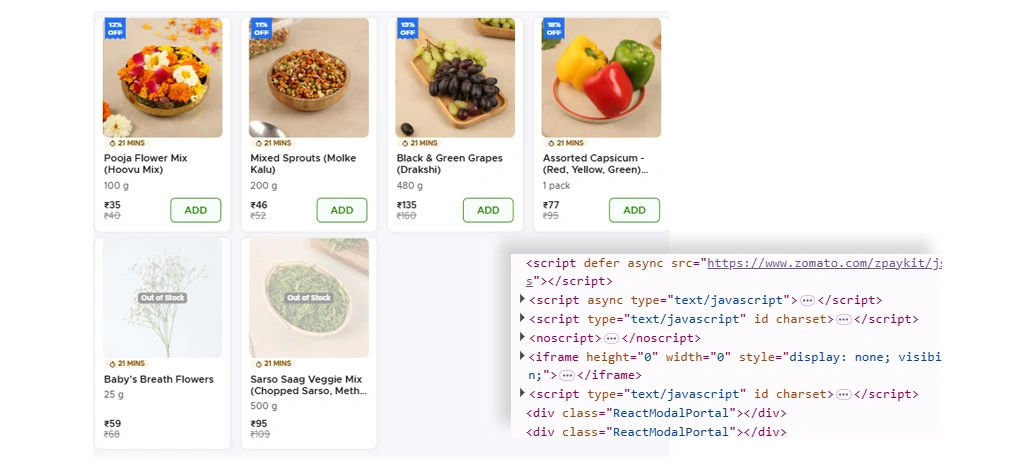

Understanding Blinkit’s Product Data Ecosystem

Core Data Entities on Blinkit

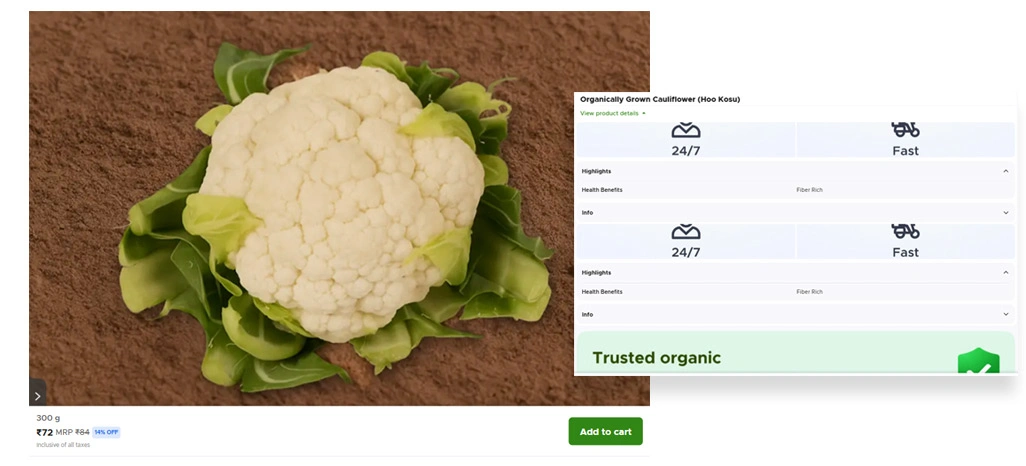

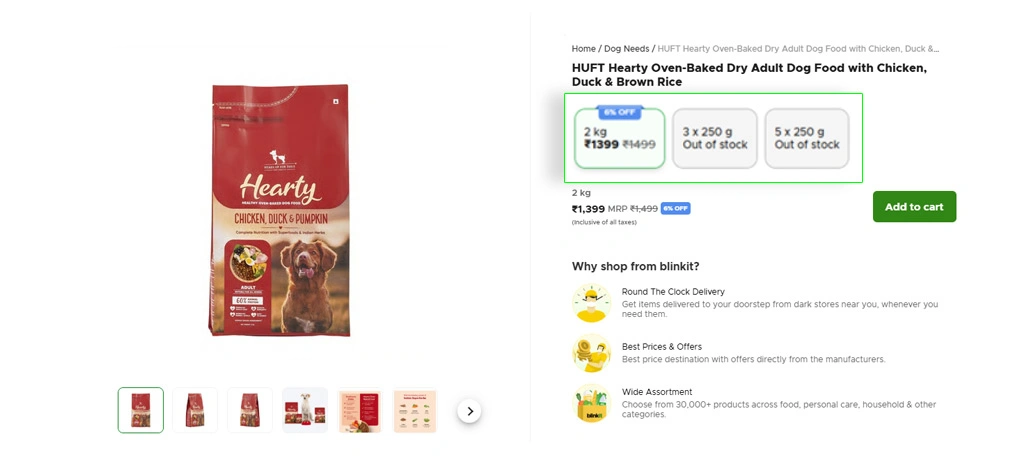

A Blinkit product listing typically includes:

- Product Name

- Brand

- Category & Subcategory

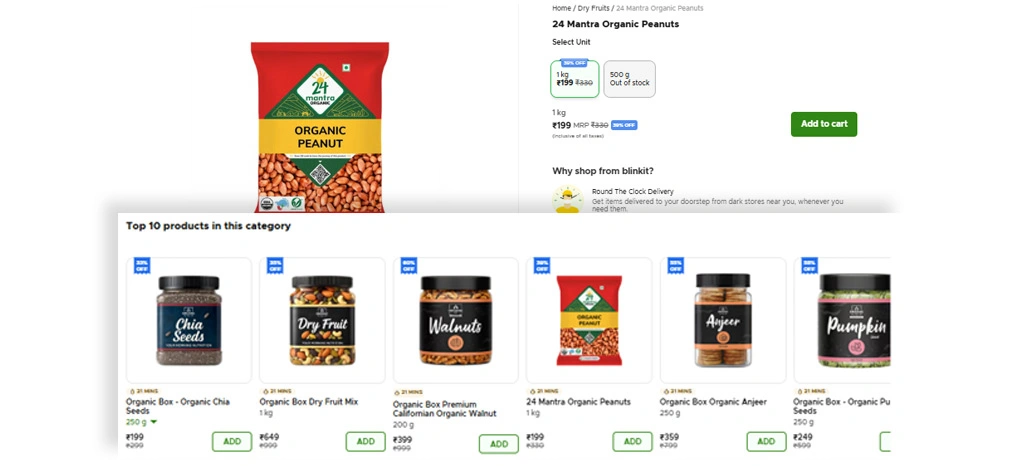

- SKU / Variant (weight, size, unit)

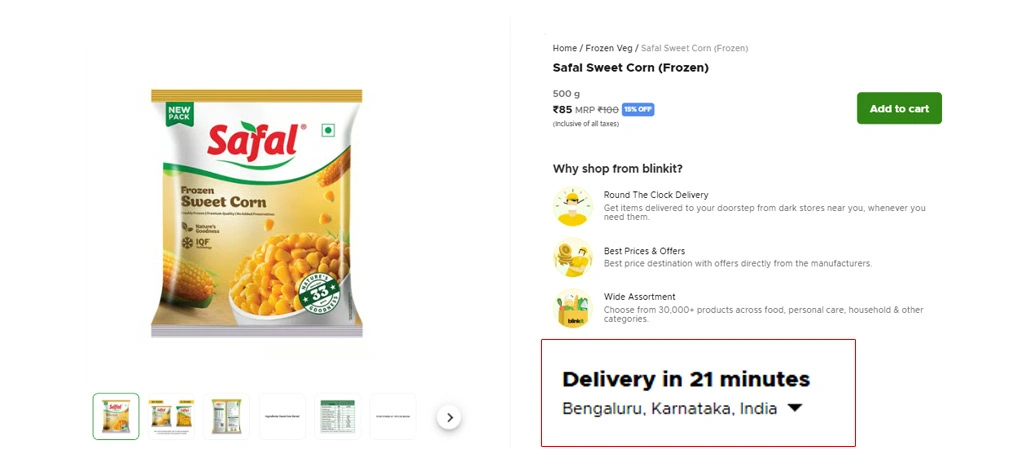

- MRP

- Selling Price

- Discount Percentage

- Availability Status

- Store / Warehouse ID

- City & Pin Code Mapping

- Delivery ETA

- Product Images

- Ratings (limited but useful)

- Tags (Best Seller, Trending, Value Pack)

Hyperlocal Nature of Blinkit Data

Blinkit data is location-dependent, meaning:

- Prices vary by city and pin code

- Product availability changes by warehouse

- Discounts fluctuate frequently

Any Blinkit API integration must be location-aware by design.

Architecture Overview: Blinkit Product Data API

Before writing a single line of code, define the system architecture.

High-Level Workflow

- User Request

- Blinkit Product Data API

- Data Fetch Layer (Scraper / Crawler)

- Parsing & Normalization Engine

- Database / Cache

- JSON / REST / GraphQL Response

Key Components

- Request Handler (API Gateway)

- Blinkit Data Extraction Engine

- Proxy & Rotation Manager

- Parsing & Validation Layer

- Data Storage Layer

- API Output Layer
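One way to picture how these components fit together is as a chain of small functions. The sketch below is a minimal illustration rather than a production design: `make_handler`, `fetch`, and `normalize` are hypothetical names, and a plain dictionary stands in for the database/cache layer.

```python
from typing import Callable, Dict, List, Tuple

Product = Dict[str, object]
CacheKey = Tuple[str, str]  # (pincode, category)


def make_handler(
    fetch: Callable[[str, str], dict],
    normalize: Callable[[dict], List[Product]],
    cache: Dict[CacheKey, List[Product]],
) -> Callable[[str, str], List[Product]]:
    """Wire the workflow stages together: cache -> fetch -> normalize -> store."""

    def handle(pincode: str, category: str) -> List[Product]:
        key = (pincode, category)
        if key in cache:                  # Database / Cache hit
            return cache[key]
        raw = fetch(pincode, category)    # Data Fetch Layer
        products = normalize(raw)         # Parsing & Normalization Engine
        cache[key] = products             # store for the next request
        return products                   # the API layer serializes this to JSON

    return handle
```

Passing the stages in as arguments keeps each layer replaceable, so the fetch logic can change without touching the API output code.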

Step 1: Location & Session Initialization

Blinkit requires location context to return correct product data.

How Location Is Handled

- City

- Latitude & Longitude

- Pin Code

- Store / Warehouse ID

Implementation Strategy

- Initialize a session with:

  - Geo-coordinates

  - Headers simulating Blinkit mobile/web app

- Persist session cookies per location

- Rotate sessions for different cities

This ensures:

- Correct pricing

- Accurate availability

- Reduced request failures
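The location and session strategy above can be sketched as a small data model. This is an illustrative outline under stated assumptions: the class names are hypothetical, the header names are placeholders (not Blinkit's actual headers), and the sketch only manages state without sending any requests.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(frozen=True)
class LocationContext:
    """Everything needed to pin a request to one delivery area."""
    city: str
    pincode: str
    latitude: float
    longitude: float
    store_id: Optional[str] = None  # filled in once the serving store is known

    def key(self) -> str:
        # One session (and one cookie jar) per city + pin code.
        return f"{self.city.lower()}:{self.pincode}"


@dataclass
class LocationSession:
    location: LocationContext
    headers: Dict[str, str]
    cookies: Dict[str, str] = field(default_factory=dict)


class SessionStore:
    """Keeps one persistent session per location so cookies are reused."""

    def __init__(self) -> None:
        self._sessions: Dict[str, LocationSession] = {}

    def get(self, location: LocationContext) -> LocationSession:
        key = location.key()
        if key not in self._sessions:
            # Placeholder header names, chosen for illustration only.
            headers = {
                "User-Agent": "example-client/1.0",
                "X-Example-Lat": str(location.latitude),
                "X-Example-Lon": str(location.longitude),
            }
            self._sessions[key] = LocationSession(location, headers)
        return self._sessions[key]
```

Calling `SessionStore.get` twice with the same location returns the same session object, so cookies set during the first request persist for later ones.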

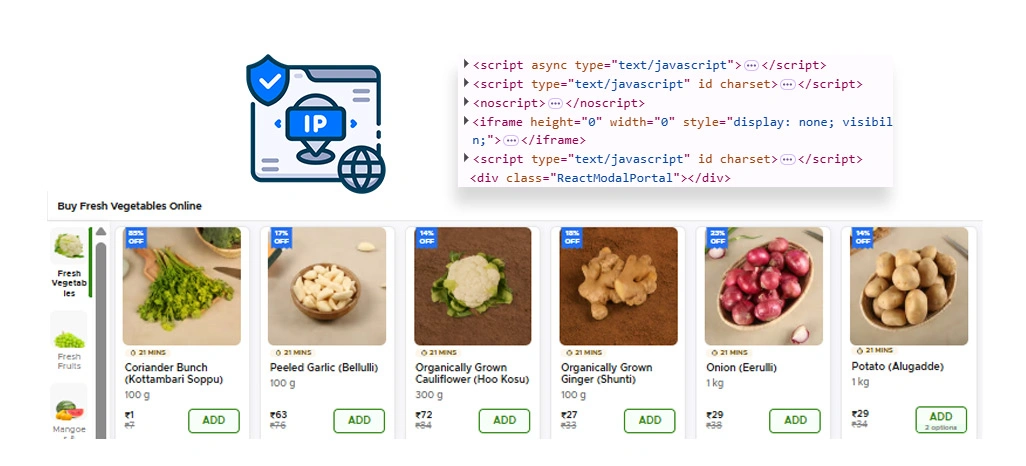

Step 2: Reverse Engineering Blinkit Data Sources

Blinkit product data is served by internal APIs that the mobile and web applications call.

Common Data Endpoints (Conceptual)

- Product listing APIs

- Search result APIs

- Category browsing APIs

- Product detail APIs

Key Challenges

- Encrypted or dynamic parameters

- Session-based tokens

- Frequent endpoint changes

Best Practices

- Use browser/app traffic analysis tools

- Track network calls during:

  - Search

  - Category browsing

  - Product detail clicks

- Abstract endpoint logic into reusable modules

Avoid hardcoding endpoints—build adaptable request handlers.
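One possible way to keep endpoints out of the calling code is a small registry that maps logical names to request templates. The sketch below is an assumption-laden illustration: `EndpointRegistry` is a hypothetical helper, and the base URL and paths are invented placeholders, not real Blinkit routes.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple
from urllib.parse import urlencode


@dataclass(frozen=True)
class EndpointSpec:
    method: str
    path_template: str  # placeholder path, not a real Blinkit route


class EndpointRegistry:
    """Maps logical names (e.g. "product_detail") to request templates."""

    def __init__(self, base_url: str, specs: Dict[str, EndpointSpec]) -> None:
        self.base_url = base_url.rstrip("/")
        self._specs = dict(specs)

    def update(self, name: str, spec: EndpointSpec) -> None:
        # When an upstream endpoint changes, only this entry is replaced.
        self._specs[name] = spec

    def build(
        self,
        name: str,
        path_params: Optional[Dict[str, str]] = None,
        query: Optional[Dict[str, str]] = None,
    ) -> Tuple[str, str]:
        spec = self._specs[name]
        url = self.base_url + spec.path_template.format(**(path_params or {}))
        if query:
            url += "?" + urlencode(query)
        return spec.method, url
```

Callers ask for `"product_detail"` rather than a URL, so an endpoint change becomes a one-line `update` call (or a config reload) instead of a code change in every scraper.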

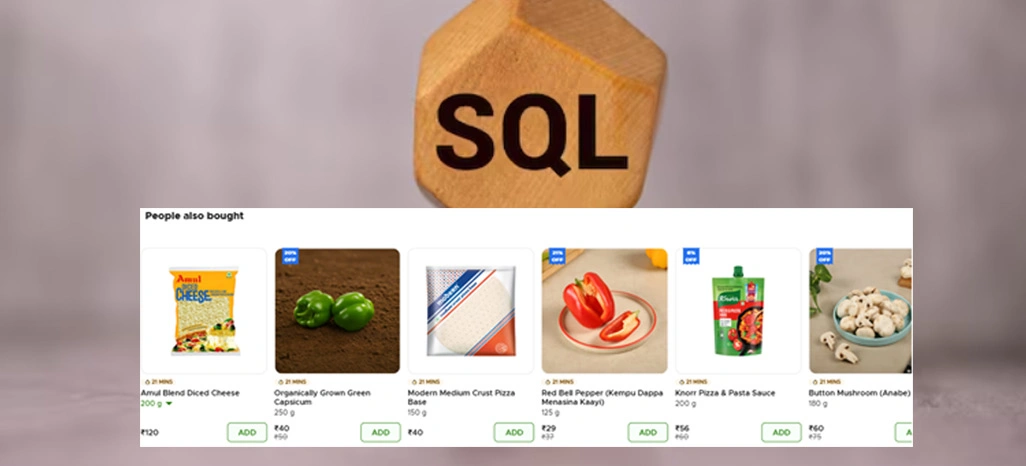

Step 3: Building the Data Extraction Layer

This is the core engine of your Blinkit Product Data API.

Extraction Techniques

- HTTP request-based scraping (preferred)

- Headless browser fallback for edge cases

- Pagination handling for large categories

- Incremental crawling for frequent updates

Key Focus Areas

- Rate-limiting controls

- Request throttling

- Intelligent retries

- Error classification (403, 429, soft blocks)
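Error classification and intelligent retries might look like the following sketch. The `Outcome` categories, the "captcha" marker used to spot soft blocks, and the retry limits are all assumptions chosen for illustration; real soft-block signals have to be learned from observed responses.

```python
import random
from enum import Enum
from typing import Optional


class Outcome(Enum):
    OK = "ok"
    RATE_LIMITED = "rate_limited"  # HTTP 429
    BLOCKED = "blocked"            # HTTP 403
    SOFT_BLOCK = "soft_block"      # HTTP 200 with a challenge page or empty body
    RETRYABLE = "retryable"        # HTTP 5xx
    FATAL = "fatal"                # anything else


def classify(status: int, body: str) -> Outcome:
    if status == 429:
        return Outcome.RATE_LIMITED
    if status == 403:
        return Outcome.BLOCKED
    if 500 <= status < 600:
        return Outcome.RETRYABLE
    if status == 200:
        # Heuristic only: an empty body or a captcha mention counts as a soft block.
        if not body.strip() or "captcha" in body.lower():
            return Outcome.SOFT_BLOCK
        return Outcome.OK
    return Outcome.FATAL


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0,
                  rng: Optional[random.Random] = None) -> float:
    """Exponential backoff with full jitter; attempt counts from 0."""
    source = rng or random
    return source.uniform(0, min(cap, base * (2 ** attempt)))


def should_retry(outcome: Outcome, attempt: int, max_attempts: int = 4) -> bool:
    # A hard 403 is not retried as-is; it usually calls for a new IP or session.
    retryable = {Outcome.RATE_LIMITED, Outcome.SOFT_BLOCK, Outcome.RETRYABLE}
    return outcome in retryable and attempt + 1 < max_attempts
```

Separating classification from the retry decision makes it easy to route a `BLOCKED` outcome to proxy rotation while `RATE_LIMITED` simply waits longer.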

Proxy & IP Strategy

- Mobile or residential IPs

- City-specific IP pools

- Automatic IP rotation

- Header randomization
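City-specific pools with automatic rotation can be modeled with a simple round-robin structure. In this sketch, `CityProxyPool` is a hypothetical class and the proxy addresses you pass in are your own; it covers rotation and retiring a bad IP, and leaves header randomization out for brevity.

```python
import itertools
from typing import Dict, Iterator, List


class CityProxyPool:
    """Round-robin rotation over a separate proxy list per city."""

    def __init__(self, proxies_by_city: Dict[str, List[str]]) -> None:
        self._pools: Dict[str, List[str]] = {}
        self._cycles: Dict[str, Iterator[str]] = {}
        for city, proxies in proxies_by_city.items():
            self._set_pool(city, list(proxies))

    def _set_pool(self, city: str, proxies: List[str]) -> None:
        if proxies:
            self._pools[city] = proxies
            self._cycles[city] = itertools.cycle(proxies)
        else:
            # No proxies left: drop the city so callers get a clear error.
            self._pools.pop(city, None)
            self._cycles.pop(city, None)

    def next_proxy(self, city: str) -> str:
        if city not in self._cycles:
            raise LookupError(f"no healthy proxies for {city}")
        return next(self._cycles[city])

    def retire(self, city: str, proxy: str) -> None:
        """Remove a proxy that keeps getting blocked and restart the rotation."""
        remaining = [p for p in self._pools.get(city, []) if p != proxy]
        self._set_pool(city, remaining)
```

Keeping pools keyed by city means a request for Mumbai pricing never leaves through an IP assigned to another city's pool.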

Step 4: Parsing & Normalizing Blinkit Product Data

Raw Blinkit responses are often nested and inconsistent.

Normalization Goals

- Convert raw JSON into structured schema

- Maintain consistency across categories

- Standardize price fields

- Normalize units (grams, kg, ml, liters)

Example Normalized Product Schema

{
  "platform": "Blinkit",
  "city": "Mumbai",
  "pincode": "400001",
  "category": "Fruits & Vegetables",
  "product_name": "Fresh Onion",
  "brand": "Local",
  "variant": "1 kg",
  "mrp": 60,
  "selling_price": 48,
  "discount_percentage": 20,
  "availability": "In Stock",
  "delivery_eta": "8 mins",
  "last_updated": "2026-01-20T10:45:00Z"
}
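To show how fields such as `variant` and `discount_percentage` could be derived consistently, here is a minimal normalization sketch. The unit aliases and the choice of grams and millilitres as base units are assumptions; extend the table to match whatever variants your crawl actually returns.

```python
import re
from typing import Optional, Tuple

# Map each unit alias to (base unit, multiplier). Assumed aliases, not exhaustive.
_UNIT_FACTORS = {
    "g": ("g", 1.0), "gm": ("g", 1.0), "gram": ("g", 1.0), "grams": ("g", 1.0),
    "kg": ("g", 1000.0),
    "ml": ("ml", 1.0),
    "l": ("ml", 1000.0), "ltr": ("ml", 1000.0),
    "litre": ("ml", 1000.0), "litres": ("ml", 1000.0),
    "liter": ("ml", 1000.0), "liters": ("ml", 1000.0),
}
_VARIANT_RE = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*([a-zA-Z]+)\s*$")


def normalize_variant(variant: str) -> Optional[Tuple[float, str]]:
    """Convert e.g. "1 kg" to (1000.0, "g"); return None if unrecognized."""
    match = _VARIANT_RE.match(variant)
    if not match:
        return None
    quantity, unit = float(match.group(1)), match.group(2).lower()
    if unit not in _UNIT_FACTORS:
        return None
    base_unit, factor = _UNIT_FACTORS[unit]
    return quantity * factor, base_unit


def discount_percentage(mrp: float, selling_price: float) -> float:
    """Discount relative to MRP, rounded to two decimals."""
    if mrp <= 0:
        raise ValueError("mrp must be positive")
    return round((mrp - selling_price) / mrp * 100, 2)
```

With the example record above, an MRP of 60 and a selling price of 48 give a 20% discount, which matches the `discount_percentage` field in the schema. Computing the value yourself, rather than trusting the upstream label, keeps the field consistent across categories.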

Data Validation Rules

- Remove duplicate SKUs

- Flag price anomalies

- Detect sudden availability drops

- Log missing fields
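Three of these rules (duplicates, price anomalies, missing fields) can be applied in a single pass, as in the sketch below. The required-field list and the definition of a SKU key as (pin code, product name, variant) are assumptions; detecting availability drops needs historical data and is not covered here.

```python
from typing import Iterable, List, Tuple

REQUIRED_FIELDS = (
    "product_name", "pincode", "variant", "mrp", "selling_price", "availability",
)


def validate(records: Iterable[dict]) -> Tuple[List[dict], List[str]]:
    """Return (clean records, human-readable issue log)."""
    seen = set()
    clean: List[dict] = []
    issues: List[str] = []
    for index, record in enumerate(records):
        missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
        if missing:
            issues.append(f"record {index}: missing {', '.join(missing)}")
            continue
        # Assumed SKU identity: same product and variant at the same pin code.
        sku_key = (record["pincode"], record["product_name"], record["variant"])
        if sku_key in seen:
            issues.append(f"record {index}: duplicate SKU")
            continue
        seen.add(sku_key)
        price, mrp = record["selling_price"], record["mrp"]
        if price <= 0 or price > mrp:
            # Keep the record but flag it, so analysts can review it later.
            issues.append(f"record {index}: price anomaly")
            record = {**record, "price_anomaly": True}
        clean.append(record)
    return clean, issues
```

Anomalous prices are flagged rather than dropped, since a selling price above MRP may be a parsing bug worth investigating rather than noise.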

Step 5: Designing the Blinkit Product Data API

Now expose the cleaned data through a developer-friendly API.

API Types You Can Offer

- REST API (most common)

- GraphQL API (advanced filtering)

- Bulk Data Feeds (CSV / JSON)

Common API Endpoints

GET /blinkit/products

GET /blinkit/products/search

GET /blinkit/products/category

GET /blinkit/prices/compare

GET /blinkit/availability

Query Parameters

- city

- pincode

- category

- brand

- price_min

- price_max

- in_stock

- last_updated
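The query parameters above could be applied to stored records roughly as follows. This is a framework-agnostic sketch: `filter_products` is a hypothetical helper, the field names follow the example schema, and treating `last_updated` as "updated at or after this timestamp" is an assumption about the intended semantics.

```python
from datetime import datetime
from typing import Iterable, List, Optional


def _parse_ts(value: str) -> datetime:
    # Replace a trailing "Z" so older Python versions can parse the timestamp.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def filter_products(
    products: Iterable[dict],
    *,
    city: Optional[str] = None,
    pincode: Optional[str] = None,
    category: Optional[str] = None,
    brand: Optional[str] = None,
    price_min: Optional[float] = None,
    price_max: Optional[float] = None,
    in_stock: Optional[bool] = None,
    last_updated: Optional[str] = None,
) -> List[dict]:
    since = _parse_ts(last_updated) if last_updated else None
    exact = {"city": city, "pincode": pincode, "category": category, "brand": brand}
    result = []
    for product in products:
        # Case-insensitive exact match on every text filter that was supplied.
        if any(
            wanted is not None and str(product.get(name, "")).lower() != wanted.lower()
            for name, wanted in exact.items()
        ):
            continue
        price = product["selling_price"]
        if price_min is not None and price < price_min:
            continue
        if price_max is not None and price > price_max:
            continue
        if in_stock is not None and (product["availability"] == "In Stock") != in_stock:
            continue
        if since is not None and _parse_ts(product["last_updated"]) < since:
            continue
        result.append(product)
    return result
```

In a real deployment these filters would usually be pushed down into the database query; the in-memory version is mainly useful for pinning down the intended behavior of each parameter.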

Step 6: Real-Time vs Scheduled Data Updates

Real-Time API

Best for:

- Price comparison tools

- Consumer apps

- Dynamic pricing engines

Challenges:

- Higher infrastructure cost

- Rate limits

Scheduled Crawling

Best for:

- Market research

- Historical analysis

- Trend reporting

Typical frequencies:

- Every 15 minutes (prices)

- Hourly (availability)

- Daily (catalog updates)

A hybrid model works best.
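A hybrid setup can be reduced to two decisions: which scheduled jobs are due, and whether a cached record is fresh enough to serve without a live fetch. The sketch below uses the frequencies listed above; the function names and the idea of passing a per-request `max_age_s` are assumptions.

```python
from typing import Dict, List

# Refresh intervals in seconds, taken from the typical frequencies above.
REFRESH_INTERVALS_S = {
    "prices": 15 * 60,
    "availability": 60 * 60,
    "catalog": 24 * 60 * 60,
}


def due_jobs(last_run: Dict[str, float], now: float) -> List[str]:
    """Scheduled side: return every job whose interval has elapsed."""
    return [
        job
        for job, interval in REFRESH_INTERVALS_S.items()
        if now - last_run.get(job, float("-inf")) >= interval
    ]


def needs_live_fetch(record_age_s: float, max_age_s: float) -> bool:
    """Real-time side: fetch live only when the stored record is too old."""
    return record_age_s > max_age_s
```

A price comparison client might call with `max_age_s=60` and trigger live fetches often, while a research dashboard could accept `max_age_s=3600` and be served entirely from scheduled crawls.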

Step 7: Data Storage & Caching Strategy

Storage Options

- Relational DB (PostgreSQL, MySQL)

- NoSQL (MongoDB, DynamoDB)

- Time-series DB for price history

Caching Layer

- Redis / Memcached

- Location-wise cache keys

- TTL-based invalidation

This reduces:

- Load on Blinkit

- API response time

- Infrastructure cost
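Location-wise keys and TTL-based invalidation can be illustrated with a small in-memory cache. The key layout (`blinkit:<pincode>:<resource>:...`) and the `TTLCache` class are assumptions for the sketch; with Redis you would typically store the same key with an expiry instead of tracking timestamps yourself.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


def cache_key(pincode: str, resource: str, **params: str) -> str:
    """Build a location-scoped key; params are sorted so order never matters."""
    parts = [f"{name}={params[name]}" for name in sorted(params)]
    return ":".join(["blinkit", pincode, resource, *parts])


class TTLCache:
    """Minimal cache where each entry expires ttl_s seconds after it is set."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_s
        self._clock = clock
        self._data: Dict[str, Tuple[float, Any]] = {}

    def set(self, key: str, value: Any) -> None:
        self._data[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._data[key]  # lazily evict the expired entry
            return None
        return value
```

Putting the pin code first in the key makes it straightforward to invalidate or inspect everything cached for one location.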

Step 8: Security, Compliance & Ethical Scraping

Security Measures

- API authentication (API keys / OAuth)

- Rate limiting per client

- Request logging & monitoring
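Per-client rate limiting is commonly implemented with a token bucket, one bucket per API key. The following is a single-process sketch under that assumption; the class names and limits are illustrative, and a multi-server deployment would need shared state (for example in the cache layer).

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate_per_s` tokens per second."""

    def __init__(self, rate_per_s: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_s
        self.capacity = capacity
        self.tokens = float(capacity)
        self._clock = clock
        self.updated = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ClientRateLimiter:
    """Keeps an independent bucket for each API key."""

    def __init__(self, rate_per_s: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._rate, self._capacity, self._clock = rate_per_s, capacity, clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, api_key: str) -> bool:
        if api_key not in self._buckets:
            self._buckets[api_key] = TokenBucket(self._rate, self._capacity, self._clock)
        return self._buckets[api_key].allow()
```

When `allow` returns `False`, the API would normally respond with HTTP 429 so well-behaved clients can back off.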

Ethical & Legal Considerations

- Respect robots.txt directives where applicable

- Avoid personal user data

- Use data for analytics, not replication

- Provide aggregated insights rather than redistributing raw data

Step 9: Monitoring, Alerts & Maintenance

Blinkit's endpoints and response formats change frequently, so your API must adapt.

Monitoring Metrics

- Success vs failure rates

- Price change frequency

- Block detection

- Data freshness

Alerts

- Endpoint failure alerts

- Abnormal price spikes

- Zero inventory anomalies
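The metrics and alerts above boil down to a few small checks. The sketch below shows one way to express them; the 50% spike threshold and the rule for a zero-inventory anomaly (stock was positive in every earlier snapshot, then hits zero) are assumptions to tune against your own data.

```python
from typing import Sequence


def success_rate(successes: int, failures: int) -> float:
    """Share of successful requests; defined as 1.0 when there was no traffic."""
    total = successes + failures
    return successes / total if total else 1.0


def is_stale(age_s: float, max_age_s: float) -> bool:
    """Data freshness check: True when the record is older than allowed."""
    return age_s > max_age_s


def price_spike(old_price: float, new_price: float, threshold: float = 0.5) -> bool:
    """True when the relative change exceeds the threshold (0.5 = 50%)."""
    if old_price <= 0:
        return True  # a non-positive baseline is itself suspicious
    return abs(new_price - old_price) / old_price > threshold


def zero_inventory_anomaly(in_stock_counts: Sequence[int]) -> bool:
    """True when in-stock SKUs drop to zero after being positive throughout."""
    if len(in_stock_counts) < 2:
        return False
    *history, latest = in_stock_counts
    return latest == 0 and all(count > 0 for count in history)
```

A sudden zero across a whole store more often signals a block or a parser failure than a genuine stock-out, which is why it is worth alerting on separately.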

Continuous Improvement

- Auto-detect schema changes

- Modular endpoint updates

- Versioned API releases
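Schema changes can be detected automatically by comparing the set of key paths in a fresh response against a stored baseline. This is a minimal sketch: `key_paths` and `schema_diff` are hypothetical helpers, and only the first element of each list is sampled, which assumes list items share a shape.

```python
from typing import Dict, List, Set


def key_paths(obj: object, prefix: str = "") -> Set[str]:
    """Collect dotted key paths from nested dicts; lists appear as "[]"."""
    paths: Set[str] = set()
    if isinstance(obj, dict):
        for key, value in obj.items():
            path = f"{prefix}.{key}" if prefix else str(key)
            paths.add(path)
            paths |= key_paths(value, path)
    elif isinstance(obj, list) and obj:
        # Sample the first element only (assumes homogeneous lists).
        paths |= key_paths(obj[0], f"{prefix}[]")
    return paths


def schema_diff(baseline: object, observed: object) -> Dict[str, List[str]]:
    """Report key paths that disappeared from or appeared in the new response."""
    expected, actual = key_paths(baseline), key_paths(observed)
    return {
        "missing": sorted(expected - actual),
        "added": sorted(actual - expected),
    }
```

A non-empty `missing` list is a good trigger for an alert, since it usually means the parser is about to start returning incomplete records.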

Step 10: Monetizing Blinkit Product Data API

Pricing Models

- Pay-per-API call

- Monthly subscription

- Location-based plans

- Custom enterprise plans

Target Customers

- FMCG brands

- D2C companies

- Retail analytics firms

- Investment research teams

- AI pricing platforms

Advanced Enhancements

Once the base API is live, you can add:

- Price Index Creation

- Blinkit vs Instamart vs Zepto Comparison

- Private Label Share Analysis

- Discount Depth Intelligence

- Demand Heatmaps

- AI-based Price Prediction Models

Conclusion

Building a Blinkit Product Data API Integration is no longer just a technical exercise—it is a strategic retail intelligence initiative. As quick-commerce platforms evolve with hyperlocal pricing, rapid inventory changes, and dynamic discounts, businesses need reliable, real-time access to structured product data to stay competitive.

By following a step-by-step approach—covering location-aware data extraction, scalable scraping architecture, robust normalization, and secure API delivery—organizations can transform raw Blinkit data into actionable insights for pricing intelligence, assortment optimization, and market benchmarking.

Solutions like Retail Scrape play a critical role in this ecosystem by enabling enterprise-grade Blinkit data extraction and API integration, helping brands, retailers, and analytics teams monitor prices, track availability, and analyze quick-commerce trends at scale. When combined with strong compliance practices, monitoring systems, and performance optimization, Retail Scrape–powered APIs become a long-term data asset rather than a one-time integration.

In today’s fast-moving retail environment, Blinkit data APIs—built the right way—are mission-critical tools, and platforms like Retail Scrape ensure that businesses can convert quick-commerce data into sustained competitive advantage.